Build Your Own GitHub Copilot II

This post is a follow-up to my previous post on fine-tuning an LLM to your own codebase: Build Your Own GitHub Copilot. You don't have to read that one to follow along here, but it would help.

In my previous experiment, we found that fine-tuning an LLM on a codebase can yield some interesting gains in code completion accuracy. Because I had access to quite limited compute (a single RTX 4090), and I wanted to see how far you could go with relatively modest resources, the scale of the experiment was quite limited: small datasets (~40k rows train, ~1k test), small number of iterations, etc. Even at this limited scale, the results were quite promising: we saw a 47% increase in exact match for fill-in-the-middle code completions.

In this post, we'll kick things up a notch. Nothing too crazy, because at the end of the day, I'm still GPU poor, and renting GPUs can burn a hole in your pocket before you can say "backpropagation".

The goal is to take the experiment to its logical conclusion and answer the question I originally set out to answer: can you get a small, fine-tuned model to perform on par with commercial products like GitHub Copilot, in a real-world test in an actual IDE? Let's find out.

The Fine-tuning Setup

An overview of the fine-tuning setup:

- Data: SAFIM (Syntax-Aware Fill-In-the-Middle) samples drawn from the Pebble OS repository, recently open-sourced by Google. (Which means that even the base model has probably not seen this code during pre-training.)

- Model: Qwen2.5-Coder-7B.

- GPU: H100 SXM, 80 GB VRAM.

- Training library: torchtune.

- Eval/inference library: vLLM.

- Eval metrics: Exact Match, CodeBLEU, Corpus BLEU, and the final, most important test: my vibes using the model on a real world coding task in the Pebble repo, in VS Code with continue.dev.

We'll discuss these choices in detail in the following sections.

I. The Data

In my last post, I used a Svelte codebase to draw SAFIM samples. While a decent choice because of its relatively niche nature, Svelte is not a supported language in CodeBLEU, an evaluation metric that takes code structure into account when measuring how close a prediction is to the ground truth.

So I decided to switch to a codebase written in C, and since I'd heard about Google open-sourcing the Pebble source code only a few days ago, I thought it would make a fine choice for this experiment. Some basic stats about the repo:

Number of C source files, excluding third party code:

pebble on main [!] via v3.12.3

❯ find -type f -name "*.c" ! -wholename "./third_party*" | wc -l

1820

Number of lines of code:

pebble on main [!] via v3.12.3

❯ find -type f -name "*.c" ! -wholename "./third_party*" -print0 | wc -l --files0-from=- | tail -1

1095271 total

So we're dealing with 1820 source files and 1M lines of code. Should make for a decent test.

I wrote a dataset generator in Rust to generate SAFIM samples from any given source repo. It parses each source file using tree-sitter, finds relevant AST nodes to mask out, and generates (prefix, suffix, middle) samples. Here's the result:
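To make the (prefix, suffix, middle) idea concrete, here's a minimal Python sketch of what a single sample looks like. It is not the Rust generator: the `mask_span` helper and `FimSample` class are hypothetical names, and the span is picked by hand here, whereas the real generator takes it from a tree-sitter AST node. The three special tokens are the FIM tokens documented for Qwen2.5-Coder.

```python
from dataclasses import dataclass

# FIM special tokens documented for Qwen2.5-Coder.
FIM_PREFIX = "<|fim_prefix|>"
FIM_SUFFIX = "<|fim_suffix|>"
FIM_MIDDLE = "<|fim_middle|>"


@dataclass
class FimSample:
    prefix: str
    middle: str  # the masked-out span: this is what the model must predict
    suffix: str

    def prompt(self) -> str:
        # Prefix and suffix go in the prompt; the model generates the middle.
        return f"{FIM_PREFIX}{self.prefix}{FIM_SUFFIX}{self.suffix}{FIM_MIDDLE}"


def mask_span(source: str, start: int, end: int) -> FimSample:
    """Split `source` around [start, end). In a syntax-aware generator the
    span would be the range of an AST node; here it is supplied by hand."""
    if not 0 <= start < end <= len(source):
        raise ValueError("span must be a non-empty range inside the source")
    return FimSample(source[:start], source[start:end], source[end:])


# Toy example: mask the return statement of a small C function.
source = "int add(int a, int b) {\n  return a + b;\n}\n"
start = source.index("return")
end = source.index(";") + 1
sample = mask_span(source, start, end)

print(repr(sample.middle))
print(sample.prompt())
```

Masking whole syntax nodes (a statement, an expression, a block) rather than random character ranges is what makes the samples "syntax-aware": the target is always something a developer would plausibly type in one go.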

❯ time cargo run -- --root /home/praveen/workspace/pebble --file-types c --test-sample-rate 0.02 --exclude-dirs third_party

Finished `dev` profile [unoptimized + debuginfo] target(s) in 0.20s

Running `target/debug/datagen --root /home/praveen/workspace/pebble --file-types c --test-sample-rate 0.02 --exclude-dirs third_party`

Parsed: (1820/1820) files.

Train: 1778 files, 294215 samples.

Test: 42 files, 7920 samples.

Average prefix length: 3481.2473

Average suffix length: 2481.9915

Average middle length: 179.14487

real 0m2.639s

user 0m32.312s

sys 0m2.395s

So our training set has 294k samples, while our test set has 7.9k. The test set is generated from a random 2% holdout of source files in the repo.
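The important detail is that the holdout happens at the file level, so no test sample comes from a file the model was trained on. Here's a rough sketch of one way to do that. The per-file random draw is an assumption on my part (it would explain why the split came out at 42 files rather than exactly 2% of 1820), and `split_files` and the file names are made up for illustration.

```python
import random


def split_files(paths, test_sample_rate, seed=0):
    """Assign each file to train or test with an independent random draw.

    Splitting by file (not by sample) keeps every sample from a held-out
    file out of the training set.
    """
    rng = random.Random(seed)
    train, test = [], []
    for path in sorted(paths):  # sort first so the split is reproducible
        if rng.random() < test_sample_rate:
            test.append(path)
        else:
            train.append(path)
    return train, test


# Hypothetical file list with the same size as the Pebble C sources.
paths = [f"src/file_{i}.c" for i in range(1820)]
train_files, test_files = split_files(paths, test_sample_rate=0.02)

print(len(train_files), len(test_files))
```

With independent draws the test set size varies from run to run around 2% of the files, which is fine for this purpose as long as the seed is fixed when you want to reproduce a split.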

The full dataset on HuggingFace: prvnsmpth/pebble-fim-dataset.

II. The Model

Last time, we used Unsloth's 4-bit quantized version of the Qwen 2.5 Coder 14B model. This time, I decided to use a smaller model – the 7B version of the same model, unquantized.

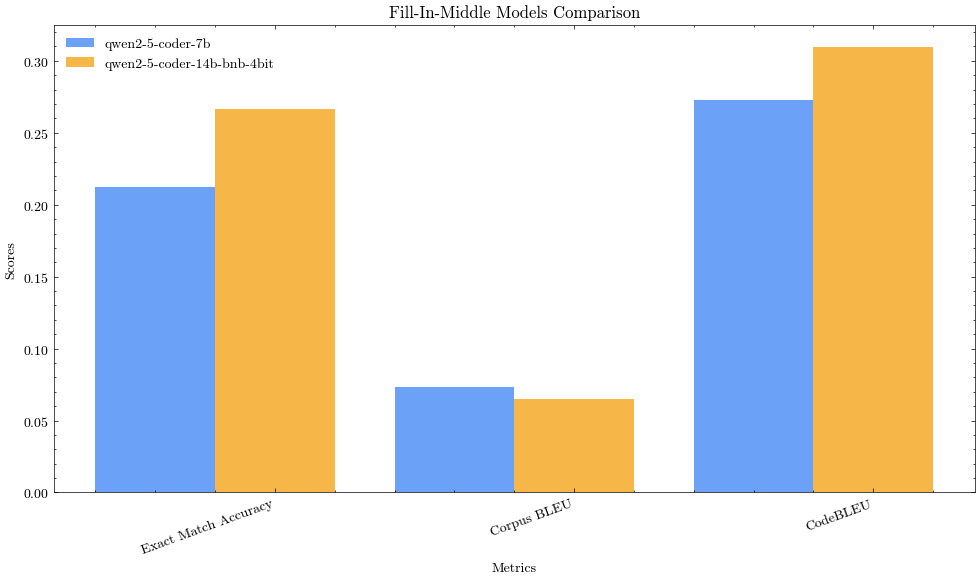

"Whatever happened to kicking things up a notch?" I hear you ask. And you would be right to question the choice, except that I discovered that the 7B model is within striking distance of the 14B version. A comparison of the two models on our test set:

The 14B is better, but not by a huge margin. Using a smaller model has obvious benefits: faster to train, faster to run inference and evals. And I was confident we could get the fine-tuned 7B to outperform the 14B, so I decided to go ahead with the former.

Here are the baseline metrics for the 7B model, the numbers to beat:

| Metric | Value |
|---|---|
| Total | 7920 |
| Exact Matches | 1679 |
| Exact Match Accuracy | 0.211995 |
| Corpus BLEU | 0.073497 |

| CodeBLEU Metrics | Value |
|---|---|
| CodeBLEU | 0.273235 |
| N-gram Match Score | 0.031706 |
| Weighted N-gram Match Score | 0.120532 |
| Syntax Match Score | 0.416179 |
| Dataflow Match Score | 0.524525 |
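For reference, exact match is the strictest of these metrics: a prediction only counts if it reproduces the masked span character for character. A minimal sketch of the computation follows. Stripping surrounding whitespace before comparing is an assumption here, not necessarily what my eval script does, and `exact_match_accuracy` is an illustrative name.

```python
def exact_match_accuracy(predictions, references):
    """Fraction of predictions identical to their reference.

    Surrounding whitespace is stripped before comparing (an assumption).
    """
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        return 0.0
    matches = sum(p.strip() == r.strip() for p, r in zip(predictions, references))
    return matches / len(references)


# Toy example: the first and third pairs match, the second does not.
preds = ["return a + b;", "x = 1;", "foo(bar)"]
refs = ["return a + b;", "x = 2;", "foo(bar) "]
toy_accuracy = exact_match_accuracy(preds, refs)

# The baseline figure in the table is simply matches / total.
baseline_accuracy = 1679 / 7920

print(round(toy_accuracy, 3), round(baseline_accuracy, 6))
```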

III. Training

I could write a whole series of separate blog posts about all the issues and error stack traces I ran into trying to run a fine-tune over the entire training set of ~294k samples. Some of the things I tried:

- Considered multi-GPU training – switched to torchtune from Unsloth (because the latter doesn't yet support it).

- Realized that torchtune doesn't support DDP, and FSDP doesn't make sense for such a small model.

- Experimented with tweaking the learning rate to get faster convergence, but it didn't actually go as intended. Ended up wasting $20 of GPU compute on an overnight training run where the loss exploded after 100 iterations.

Finally, after some trial-and-error, I settled on the following setup that worked extremely well:

- Single GPU, H100, 80 GB

- Effective batch size 128 (batch size 8 * 16 grad accumulation steps)

- Dataset packing (this one was particularly effective).

- Use torch.compile.

With this, I was able to train on the first 25% of the dataset in approx. 3 hours. Training cost: $6.
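To give a feel for why packing helps so much, here's a toy sketch. Without packing, every sample is padded out to the maximum sequence length, and the padding is wasted compute. Packing concatenates several short samples into one sequence instead. This is a simple greedy version for illustration, not torchtune's implementation, and the token lengths and the 4096 limit are made-up numbers.

```python
def pack_greedy(lengths, max_seq_len):
    """Group consecutive samples into packs whose total length fits max_seq_len.

    Returns a list of packs, each a list of sample indices.
    """
    packs, current, used = [], [], 0
    for i, n in enumerate(lengths):
        if n > max_seq_len:
            raise ValueError(f"sample {i} is longer than max_seq_len")
        if used + n > max_seq_len:
            # Current pack is full: close it and start a new one.
            packs.append(current)
            current, used = [], 0
        current.append(i)
        used += n
    if current:
        packs.append(current)
    return packs


def utilization(total_tokens, num_sequences, max_seq_len):
    """Share of token slots holding real tokens rather than padding."""
    return total_tokens / (num_sequences * max_seq_len)


# Hypothetical token lengths for six samples.
lengths = [1500, 300, 2000, 700, 3900, 100]
max_seq_len = 4096

packs = pack_greedy(lengths, max_seq_len)
unpacked_util = utilization(sum(lengths), len(lengths), max_seq_len)
packed_util = utilization(sum(lengths), len(packs), max_seq_len)

# Effective batch size from the setup above: 8 per step x 16 accumulation steps.
effective_batch_size = 8 * 16

print(packs, round(unpacked_util, 2), round(packed_util, 2), effective_batch_size)
```

In this toy case six padded sequences become three packed ones, so each optimizer step sees roughly twice as many real tokens for the same memory budget.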

IV. Evaluation

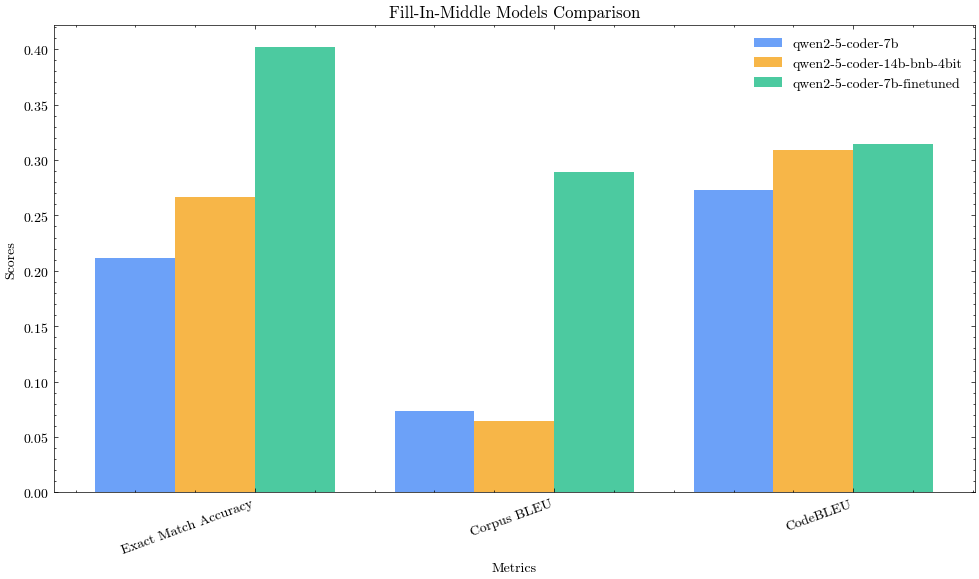

So what does $6 and 3 hours of fine-tuning get you in terms of metric improvements? Well, quite a lot:

Exact match accuracy saw an 89% improvement relative to the baseline 7B model, and 50% over the 14B model. The n-gram based corpus BLEU metric has more than tripled.

Curiously, the CodeBLEU metric saw only a modest improvement (about 15%), which doesn't make sense. The model is producing exact matches about twice as often, so you'd expect to see a larger change. Not sure what's going on here.

Full comparison of metrics against the baseline:

| Metric | Qwen2.5-Coder-7B | Qwen2.5-Coder-7B-Finetuned |
|---|---|---|
| Total | 7920 | 7920 |
| Exact Matches | 1679 | 3180 |
| Exact Match Accuracy | 0.211995 | 0.401515 |
| Corpus BLEU | 0.073497 | 0.289182 |

| CodeBLEU Metrics | Baseline | Finetuned |
|---|---|---|
| CodeBLEU | 0.273235 | 0.314254 |
| N-gram Match Score | 0.031706 | 0.148936 |
| Weighted N-gram Match Score | 0.120532 | 0.149482 |
| Syntax Match Score | 0.416179 | 0.467262 |
| Dataflow Match Score | 0.524525 | 0.491335 |

Interestingly, the CodeBLEU dataflow match score actually decreased, even though we have a model that's producing exact matches about twice as often. Something is off with the dataflow metric here, and it needs a closer look.
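One thing the table does let us check: the reported CodeBLEU values are consistent with a plain equal-weight average of the four component scores. The sketch below recomputes the aggregate from the components. The 0.25 weights are inferred from the numbers in the table, not taken from my eval configuration.

```python
COMPONENTS = ("ngram", "weighted_ngram", "syntax", "dataflow")

# Component scores copied from the table above.
baseline = {"ngram": 0.031706, "weighted_ngram": 0.120532,
            "syntax": 0.416179, "dataflow": 0.524525}
finetuned = {"ngram": 0.148936, "weighted_ngram": 0.149482,
             "syntax": 0.467262, "dataflow": 0.491335}


def codebleu(scores, weights=(0.25, 0.25, 0.25, 0.25)):
    """Weighted sum of the four component scores (equal weights assumed)."""
    return sum(w * scores[k] for w, k in zip(weights, COMPONENTS))


base_score = codebleu(baseline)
ft_score = codebleu(finetuned)

# Per-component change, to see what is driving the aggregate.
deltas = {k: finetuned[k] - baseline[k] for k in COMPONENTS}

print(round(base_score, 5), round(ft_score, 5))
for name, delta in deltas.items():
    print(f"{name:>15}: {delta:+.4f}")
```

Seen this way, the small aggregate gain is less mysterious: the n-gram score jumps a lot in relative terms, but the aggregate is dominated by the syntax and dataflow scores, which sit at 0.4 to 0.5 for both models, and the drop in dataflow cancels part of the gain elsewhere. It doesn't explain why the dataflow score fell in the first place, though.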

V. The Moment of Truth: How Good is it in Action?

We finally move on to perhaps the most important metric when it comes to code completion assistants: vibes.

Metrics and benchmarks look pretty on a blog post – but what we really want to know is if this model is any good in real world use, on our chosen codebase.

So, we'll pit this model against GitHub Copilot (running gpt-4o) on the Pebble project in VS Code. Here's a screencast of my evaluation of the two:

David v Goliath

I think it's pretty clear. Our model – a 7B parameter David – can easily hold its own against the 200B parameter gpt-4o Goliath. (EDIT: I've since learned that Copilot uses gpt-4o-mini for free users – kinda obvious in retrospect – but my point still stands.)

And it's not entirely surprising. The model has learnt the patterns of API calls within the codebase during training. And since most of the time (98% in our case, the proportion of source files that went into the training set) we'll be running inference on files it was trained on, our model is expected to do quite well. Even better than Copilot, which has very little knowledge of the rest of the codebase and relies entirely on RAG to pull in relevant context.

Conclusion

In summary, I think training and deploying your own coding assistant is very much a viable option. Certainly so, if your organization is reluctant to use Copilot and similar solutions on proprietary code.

Another aspect of private coding assistants that I haven't directly touched upon also happens to be one of the top complaints developers have about AI code completion assistants: they can be too slow at times of high load. A 7B param model deployed on an org's private infrastructure, serving completions only to its own developers, doesn't have to contend with the rest of Copilot's customers for inference capacity. I'll probably explore this subject of inference hardware requirements in a future post.